It goes without saying that automated testing is extraordinarily important in every industry that creates software. It is the thing that keeps the lights on, prevents your automated EV smart cars from crashing into pedestrians, and ensures that planes don’t fall out of the sky… hopefully.

That said, there seems to be an aversion to automated testing for Infrastructure as Code (IaC), particularly Terraform, within the Infrastructure Operations community and even the broader “DevOps engineering” community (I’ve placed this in quotes for reasons I’ll touch on later).

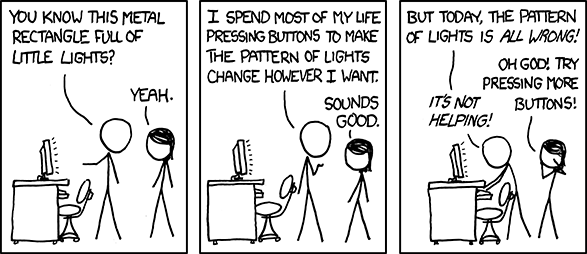

I’ve asked myself, “Why does there seem to be such a vehement lack of interest in testability in a field that can fail so critically?” The only conclusion I’ve come to is that it stems from a form of cultural continuity: the enduring sysadmin desire for manual button pressing and a general disinterest in automating tasks they enjoy doing manually. The proposition that automating most testing will somehow remove your ability to do manual exploratory testing is preposterous, as there will always be room for manual testing.

The key takeaway here is that it is critical we treat our infrastructure code with the same level of scrutiny as we do our application code. We exist in an industry that prides itself on DevOps practices, and automated testing is arguably one of, if not the most, important elements of DevOps itself. Therefore, I would argue it is antithetical to DevOps if we do not have solid automated testing for the tools that live in the layers in between (i.e., infrastructure), or even the tools and runtimes that run our automated test suites for applications, such as GitHub Actions or GitLab CI.

As DevOps Engineers, it’s important that we’re engineers first and that we apply the scientific method in our work, reducing the number of unknowns through control and experimental groups.

The Testing Pyramid

DORA’s Ideal Testing Automation Pyramid

The automated testing pyramid from DORA (the DevOps Research and Assessment group) is shown here. I believe this is derived from Martin Fowler’s ideal testing pyramid (but who’s counting?).

In the diagram below, we can adapt the UI testing layer to represent E2E testing in the infrastructure context - testing complete infrastructure deployments across multiple modules or bootstrapping entire environments. The middle layer represents integration tests for root modules and their interactions, while the base layer represents unit tests for individual child modules and components.

Spoiler alert: That tiny invisible manual testing triangle at the top isn’t an invitation to skip everything underneath it. Yet here we are, with countless Terraform modules floating around with all the testing rigor of a Wikipedia article written at 3 AM.

Anyway…

The adapted form of the testing pyramid may look something like this:

In order from the top, we have:

- The invisible manual testing triangle. This is the manual testing engineers perform in a sandbox environment when they are unsure about a new infrastructure change, for example when trying out new functionality or prototyping a new solution.

- The E2E testing layer. This layer tests complete infrastructure deployments across multiple modules, or the bootstrapping of entire environments. It will likely look like a DAG (Directed Acyclic Graph) of Terraform commands across a series of root modules.

- The integration testing layer. This will generally consist of a single service-oriented root module. At Synapse, we use the Trussworks folder structure model, which means that we separate our root modules into folders by service and integrate between them using a remote state data source. (This is not recommended for security reasons, as the remote state is loaded entirely into memory, but we’re working on a solution to avoid doing this in the future.)

.
├── bin
├── modules
├── orgname-org-root
│   ├── admin-global
│   └── bootstrap
├── orgname-staging
│   ├── admin-global
│   ├── bootstrap
│   └── todo-app-staging <- This is where the integration testing would be performed.
└── orgname-infra
    ├── admin-global
    ├── bootstrap
    └── <infra resource -- eg, atlantis>

- The unit testing layer. This is the lowest-level and most granular layer. Generally, these tests check expected outputs from static inputs, covering both the negative paths and the “happy paths”.
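As a rough illustration of the unit layer, here is a minimal sketch using Terraform's built-in test framework (Terraform 1.6 or later). The module, its `environment` variable, the `bucket_name` output, and the file names are all hypothetical; the only goal is to show one happy path and one negative path driven by static inputs, with no provider or cloud account involved.

```hcl
# --- main.tf (hypothetical child module; needs no provider) ---
variable "environment" {
  type = string

  validation {
    condition     = contains(["staging", "production"], var.environment)
    error_message = "The environment must be \"staging\" or \"production\"."
  }
}

output "bucket_name" {
  value = "orgname-${var.environment}-assets"
}

# --- tests/naming.tftest.hcl ---
# Happy path: a static input should yield a predictable output.
run "builds_expected_bucket_name" {
  command = plan

  variables {
    environment = "staging"
  }

  assert {
    condition     = output.bucket_name == "orgname-staging-assets"
    error_message = "bucket_name did not follow the expected naming pattern."
  }
}

# Negative path: an unsupported value should trip the variable validation.
run "rejects_unknown_environment" {
  command = plan

  variables {
    environment = "sandbox"
  }

  expect_failures = [
    var.environment,
  ]
}
```

Running `terraform test` from the module directory should pick up the file under `tests/`. Because both runs use `command = plan`, nothing is created.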

This pyramid is important because it shows the proportion of automated testing that should exist beneath slow, manual, exploratory testing. There are so many Terraform community modules, private root modules in organizations, and others that do not utilize automated testing. This is not ideal, as it opens up your infrastructure code to many modes of failure. Why should we accept this risk of failure in a layer that can cost our organization millions of dollars from a single typo?

In the next section, I’ll break down the various forms of testing, the challenges of applying them in Terraform, and how we can try to overcome some of those obstacles.

The Testing Tools Problem

The Linting Gap

Now, before you get on my ass about linting not being synonymous with automated testing, I understand that linting occupies a space somewhere between automated testing, static analysis, and formatting. There isn’t one universally accepted definition of linting, but these key points are important:

- Linting in other languages often catches well-known “sins” of the language, and the same should be true for Terraform.

- Linting can be used to quickly catch security bugs.

- Linting can detect circular dependencies in Terraform, something that can absolutely happen unintentionally, a good example being if a new engineer is unfamiliar with Terraform and misuses the depends_on argument.
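To make the circular dependency case concrete, here is a minimal sketch of how misusing depends_on can create a cycle. The resource names are hypothetical, and it uses the built-in `terraform_data` resource (Terraform 1.4 or later) so no provider is required.

```hcl
# Each resource waits on the other, so neither can be created first and
# Terraform cannot build a valid dependency graph.
resource "terraform_data" "network" {
  depends_on = [terraform_data.firewall]
}

resource "terraform_data" "firewall" {
  depends_on = [terraform_data.network]
}
```

Terraform is expected to reject this configuration with a cycle error once it builds the dependency graph. In a real codebase the loop is usually spread across several files or modules, which is why catching it early in a lint step is valuable.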

While linting tools exist for Terraform (such as tflint, and the built-in terraform fmt & validate), they are far from as robust as the linting ecosystems available for traditional programming languages. Current solutions barely scratch the surface, focusing mostly on formatting and basic syntax validation while missing opportunities for deeper static analysis, best practice enforcement, team coding practice enforcement, and project structure validation.

The gap becomes particularly evident when dealing with infrastructure misconfigurations, security anti-patterns, and cost optimization opportunities. Organizations need more sophisticated tooling that understands cloud provider-specific nuances, as well as organizational rules like internal best practices or coding standards. Existing tools fall significantly short in these areas.

Saving Grace in OpenTofu?

The Tofu team seems to be interested in my proposal to add native linting support to OpenTofu. The conversation that followed broke the proposal into multiple layers of implementation, all of which seem promising. I’ll shamelessly ask that you go upvote it; I’d also love to work on this implementation myself, so of course, I’m biased.

The Static Analysis Gap

Static analysis for Infrastructure as Code is severely lagging behind application development tools. Tools like Checkov and tfsec have emerged to address security compliance, but they only represent a fraction of what’s possible with comprehensive static analysis. The current tooling landscape has several critical shortcomings:

- No easy way to write custom rules.

- Painful user experiences and inconsistencies. Many “violations” in these tools are highly opinionated and lack universal consensus within the industry.

- No built-in way to exclude rules that generate additional costs. Numerous infrastructure rules in SAST tools can be costly to comply with.
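The closest workaround to the cost problem today appears to be suppressing checks one at a time. The sketch below assumes Checkov's inline skip comment and uses a hypothetical bucket; CKV_AWS_144 is, to my knowledge, the check for S3 cross-region replication, so verify the ID against the Checkov version you run.

```hcl
resource "aws_s3_bucket" "assets" {
  # Inline suppression: skips a single Checkov check for this resource only.
  # Cross-region replication adds storage and transfer costs that a staging
  # bucket may not justify.
  #checkov:skip=CKV_AWS_144:Replication is not worth the cost in staging
  bucket = "orgname-staging-assets" # hypothetical bucket name
}
```

This works, but it has to be repeated per resource and per rule, which is exactly the kind of friction described above.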

I will say that the SAST ecosystem for Terraform seems to be one of the more developed, with most tools offering a mix of good practices alongside some questionable ones. They do help make engineers self-scrutinize “risky” code by asking, “Should I heed this violation message?”

That said, while gaps in these tools are apparent across all that I’ve used, there have been notable improvements in a few tools. Still, there’s significant room for improvement in this area.

Immature First-Party Testing Support

Terraform’s built-in testing capabilities, while improving, remain rudimentary compared to mature testing frameworks in other languages. The terraform test command, introduced in version v1.6.0, provides basic functionality but doesn’t address core testing needs.

The absence of proper mocking capabilities for provider APIs forces teams to create complex workarounds or rely on live infrastructure for testing. Mocking a provider does exist, but it’s anemic at best, as it only returns random primitives instead of deterministic mocked internals. As a software engineer, I don’t like mocking anyway, especially since it often ends up becoming a code smell. However, I can see how it’s likely necessary in Terraform, as cloud API side effects can change behind the scenes.
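For reference, this is roughly what provider mocking looks like today. It is a minimal sketch that assumes Terraform 1.7 or later, where `mock_provider` was introduced; the module, bucket name, and file names are hypothetical.

```hcl
# --- main.tf (hypothetical module under test) ---
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

resource "aws_s3_bucket" "assets" {
  bucket = "orgname-staging-assets"
}

output "bucket_arn" {
  value = aws_s3_bucket.assets.arn
}

# --- tests/mocked.tftest.hcl ---
# Swap the real AWS provider for a mock so the test needs no credentials
# and makes no API calls.
mock_provider "aws" {
  mock_resource "aws_s3_bucket" {
    # Computed attributes not listed here get generated placeholder values.
    defaults = {
      arn = "arn:aws:s3:::orgname-staging-assets"
    }
  }
}

run "bucket_arn_is_exposed" {
  # Mocked computed values are filled in during apply, not plan.
  command = apply

  assert {
    condition     = output.bucket_arn == "arn:aws:s3:::orgname-staging-assets"
    error_message = "bucket_arn did not match the mocked default."
  }
}
```

Every computed attribute you care about has to be pinned by hand in `defaults`, and nothing here models how the real API behaves, so the test only proves that values are wired through correctly.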

The lack of performance testing capabilities further compounds these limitations. Performance degradation in plans and applies, especially in certain scenarios, can severely impact production infrastructure code by slowing down feedback loops to a crawl.

If you doubt that performance is important, just look up how long it takes to delete a non-empty S3 bucket using Terraform. It issues a separate delete request for every object in the bucket, which can take hours. We should be able to write tests for these cases.
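The configuration that triggers this behavior is small, which is part of why it surprises people. A minimal sketch, with a hypothetical bucket name:

```hcl
resource "aws_s3_bucket" "logs" {
  bucket = "orgname-staging-logs" # hypothetical bucket name

  # Allows `terraform destroy` to remove the bucket even when it still holds
  # objects. Terraform has to empty the bucket first, so destroy time grows
  # with the number of objects stored in it.
  force_destroy = true
}
```

Nothing in the plan output hints at how long the destroy will take, and there is currently no first-party way to assert an upper bound on it in a test.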

The Provider Version Testing Nightmare

The Terraform provider ecosystem faces a fundamental challenge with semantic versioning. Unlike most modern software systems that strictly adhere to semver principles, Terraform providers often introduce breaking changes in minor versions. This isn’t just inconvenient for consumers of the provider; it makes maintaining modules consumed by others (whether private or public) nearly impossible to test in a reasonable way.

HashiCorp Prescribed Best Practices

When creating a reusable Terraform module, best practices dictate specifying the lowest supported provider version that includes the features required by the module. This should theoretically allow the module to work with all future minor and patch versions. However, due to providers’ loose interpretation of semantic versioning, this assumption breaks down in practice.

The problem becomes particularly evident when trying to test modules across provider versions. Unlike mature ecosystems like Node.js, where version testing frameworks allow developers to specify and test against multiple runtime versions, Terraform lacks built-in capabilities to specify provider versions during test execution. This limitation makes it impossible to systematically verify module compatibility across provider versions in CI pipelines.

Consider a module that works with AWS provider version 4.0.0. To ensure compatibility with all subsequent 4.x versions, module authors would need to verify their code against each minor version — 4.1.0, 4.2.0, etc. Without the ability to parameterize provider versions in tests, this verification becomes a manual, error-prone process, often leading to undiscovered compatibility issues in production.
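The constraint such a module would declare looks roughly like the sketch below. The version numbers mirror the example above and are illustrative only.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"

      # Lower bound: the oldest release the module is known to work with.
      # Upper bound: stop before the next major version.
      # Every 4.x release in between is implicitly claimed as compatible,
      # even though none of them are exercised by the module's tests.
      version = ">= 4.0.0, < 5.0.0"
    }
  }
}
```

The constraint is a promise about a whole range of versions, while a test run only ever resolves one of them. Since the constraint must be a literal string, it cannot be varied from the command line, so covering the range means editing or templating this block for each version you want to check.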

Another Potential Solution in OpenTofu?

There may be a potential solution if OpenTofu agrees to implement my proposed change, which suggests adding variables to required_providers blocks or at least adding overrides. This would allow us to test modules in isolation, without a root module or test scaffolds. By passing in variables along with a provider version override, we could see if tests pass.

An example would be:

Passing a variable into the required provider for AWS:

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = var.aws_version
    }
  }
}

Creating an auto-loaded tfvars file with the desired minimum required version:

aws_version = ">= 4.34.0"

Running terraform test -var='aws_version=5.0.0' with a different version override in each CI job would then produce one test case per provider version.

Alternatively, we could pass provider versions in with first-party testing support inside a Terraform Test tftest.hcl file.

The Way Forward

Community Solutions

The Terraform community hasn’t been idle in addressing these challenges. Tools like Terratest, Kitchen-Terraform, and Open Policy Agent (OPA) have emerged to fill critical gaps in the testing ecosystem. However, these solutions often require significant investment in learning new technologies and languages beyond HCL, creating a barrier to adoption for many teams. The problem has primarily been that changes at HashiCorp have been slow: PRs are left open for months while the Terraform team focuses on other areas. It is improving, but it’s not where it needs to be… yet.

Making the Best of What We Have

Organizations must adapt to the current limitations of the testing ecosystem while pushing for better solutions. This includes implementing comprehensive pre-commit hooks, maintaining minimal test environments, and using feature toggles for gradual infrastructure changes. Robust monitoring serves as a critical safety net, catching issues that testing might miss.

The key lies in developing a testing strategy that acknowledges these limitations while maximizing the value of available tools. Teams should focus on identifying critical infrastructure components that require the most thorough testing and allocate resources accordingly.

What You Can Do About It

The most impactful ways to improve the IaC testing ecosystem are:

- Contribute to open-source testing tools and documentation.

- Share real-world testing patterns within your organization.

- Build internal testing tools to address specific organizational needs.

- Participate actively in community discussions about testing standards.

- Advocate for better testing practices within your teams.

The key to progress lies not just in implementing these actions, but in fostering a culture that values infrastructure testing as much as application testing. This is key to moving the industry forward instead of back toward the cranky “network wizard” who brags about his 37-year uptime and tells his friends to never reboot.

The Painful Conclusion

The state of testing in Infrastructure as Code, particularly in Terraform, remains inadequate for its critical role in modern software development. While the community has made progress in developing testing tools and methodologies, we’re still far from achieving parity with application development testing ecosystems.

Moving forward requires a collective effort from the community, tool vendors, and practitioners to elevate IaC testing to its rightful place of importance. The future depends on our willingness to challenge the status quo and invest in building better testing tools and practices. Only through this commitment can we truly claim to be practicing DevOps in its fullest sense, ensuring our infrastructure code receives the same rigorous testing as our application code.
